Federated Datacubes Enriched with ML

The Challenge

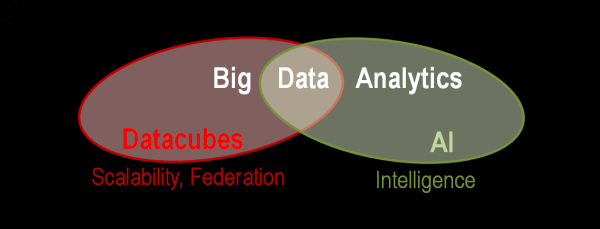

Datacubes provide spatio-temporal flexibility and scalability, while Machine Learning (ML) provides deeper insights. Today, however, the two form separate silos, and experts have to switch back and forth between them. Much better (read: easier and faster to use) is to offer both in a seamlessly integrated way.

Ideally, as with AI-Cubes™, ML is fully integrated into all optimization, distributed processing, and other mechanisms of the datacube engine.

Capability Demonstration

In the AI-Cube project, the rasdaman datacube engine has been enhanced so that ML models can be invoked from within datacube queries.

Technically, the OGC-standardized WCPS geo datacube query language is extended via User-Defined Functions (UDFs) that invoke PyTorch in the server for model application. Any region and any model can be passed to the server. From a user (i.e., query writer) perspective, this external code appears like a regular query function.

The following example illustrates the principle of how pretrained ML models, stored in the database, can be invoked (here via nn.predict) as part of a general analytics query:

```
for $c in (Sentinel_2a),
    $m in (CropModel)
return encode( nn.predict( $c[...], $m ), "tiff" )
```
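Because a datacube engine stores and processes arrays in tiles, a call like nn.predict can in principle be applied tile by tile, which is what makes parallel and distributed execution possible. The sketch below assumes a per-pixel model and uses plain nested lists; `predict_tiled`, `tiles` and the threshold "model" are hypothetical names for illustration, not how rasdaman actually schedules the call.

```python
from typing import Callable, Iterator, List, Tuple

Grid = List[List[float]]
Model = Callable[[Grid], Grid]


def tiles(height: int, width: int, size: int) -> Iterator[Tuple[int, int, int, int]]:
    """Yield (row_start, row_end, col_start, col_end); edge tiles may be smaller."""
    for r0 in range(0, height, size):
        for c0 in range(0, width, size):
            yield r0, min(r0 + size, height), c0, min(c0 + size, width)


def predict_tiled(grid: Grid, model: Model, size: int = 256) -> Grid:
    """Apply `model` to each tile independently and stitch the results.

    Only correct for per-pixel models: a CNN with a receptive field wider
    than one pixel would need overlapping tiles (halos), omitted here.
    """
    height = len(grid)
    width = len(grid[0]) if height else 0
    out: Grid = [[0.0] * width for _ in range(height)]
    for r0, r1, c0, c1 in tiles(height, width, size):
        block = [row[c0:c1] for row in grid[r0:r1]]
        for i, pred_row in enumerate(model(block)):
            out[r0 + i][c0:c1] = pred_row
    return out


# Hypothetical per-pixel "model": a simple threshold classifier.
def threshold(block: Grid) -> Grid:
    return [[1 if v > 0.5 else 0 for v in row] for row in block]
```

Since each tile is processed independently, the tiles could be handed to different workers; for a per-pixel model the stitched result equals applying the model to the whole array at once.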

A particular twist of the RSVQA (Remote Sensing Visual Question Answering) technique contributed by TU Berlin is its integration with natural language processing: a question is submitted along with Sentinel-1 and Sentinel-2 patches and the model, and the answer is again returned in natural language. The WCPS query has the following structure:

```
for $S1 in (S1_GRDH_IW),
    $S2 in (S2_L2A),
    $m  in (MyModel)
let $patch := [ {space-time selection of 256x256 patch} ]
return
    rsvqa.predict2(
        $S1[$patch], $S2[$patch],
        $m,
        "Are there some airports?"
    )
```
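A client can submit such a query to the server as an OGC WCS ProcessCoverages request. The sketch below assembles the RSVQA query and encodes it into a GET URL; it assumes a local rasdaman installation at its default `/rasdaman/ows` endpoint, and leaves the space-time subset as a caller-supplied string because axis names and bounds depend on the coverages. The helper names are hypothetical, and the call only works if the server has the rsvqa.predict2 UDF and the model installed.

```python
from urllib.parse import urlencode

# Assumption: a local rasdaman installation with its default OWS endpoint.
ENDPOINT = "http://localhost:8080/rasdaman/ows"


def rsvqa_query(patch: str, question: str, model: str = "MyModel") -> str:
    """Assemble the RSVQA WCPS query.

    `patch` is the space-time subset for the 256x256 patch; its axis
    names and bounds depend on the coverages and are left to the caller.
    """
    if '"' in question:
        # Avoid guessing WCPS string-escaping rules; refuse embedded quotes.
        raise ValueError("question must not contain double quotes")
    return (
        f"for $S1 in (S1_GRDH_IW), $S2 in (S2_L2A), $m in ({model}) "
        f"let $patch := [{patch}] "
        f'return rsvqa.predict2($S1[$patch], $S2[$patch], $m, "{question}")'
    )


def process_coverages_url(query: str, endpoint: str = ENDPOINT) -> str:
    """Encode a WCPS query as a WCS ProcessCoverages GET request URL."""
    params = {
        "service": "WCS",
        "version": "2.0.1",
        "request": "ProcessCoverages",
        "query": query,
    }
    return f"{endpoint}?{urlencode(params)}"
```

Long queries can exceed URL length limits, so a POST request carrying the same parameters may be the more robust choice in practice.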

Next steps include further use case demonstrations, in particular involving data fusion, and building libraries of useful, high-accuracy models.

Publications

- P. Baumann, D. Misev: AI and Datacubes: Towards a Happy Marriage (poster). Proc. ESA Big Data from Space, Vienna, Austria, 2023

- P. Baumann, O. Campos, D. Misev: AI-Enabled Analysis-Ready Datacubes: Towards a Roadmap for More Human-Centric Services. Proc. IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Pasadena, USA, July 2023

- O.J. Campos Escobar, P. Baumann: Implementation Roadmap for Neural Networks in Array Databases. Proc. International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, USA, December 2022, doi: 10.1109/CSCI58124.2022.00024

User Benefit

- more powerful: seamless integration of ML into queries, including data fusion

- all server-side optimizations are applied automatically

- not tied to any particular type of model; model libraries can be built ("Hugging Face with datacubes")

- unlimited use of trained models without downloading them, hence full IP protection